NVIDIA and Amazon Web Services (AWS) have announced a partnership spanning supercomputing and AI. The collaboration covers three offerings: NVIDIA DGX Cloud powered by the Grace Hopper GH200 superchip, Project Ceiba for supercomputing, and NeMo Retriever for AI language models.

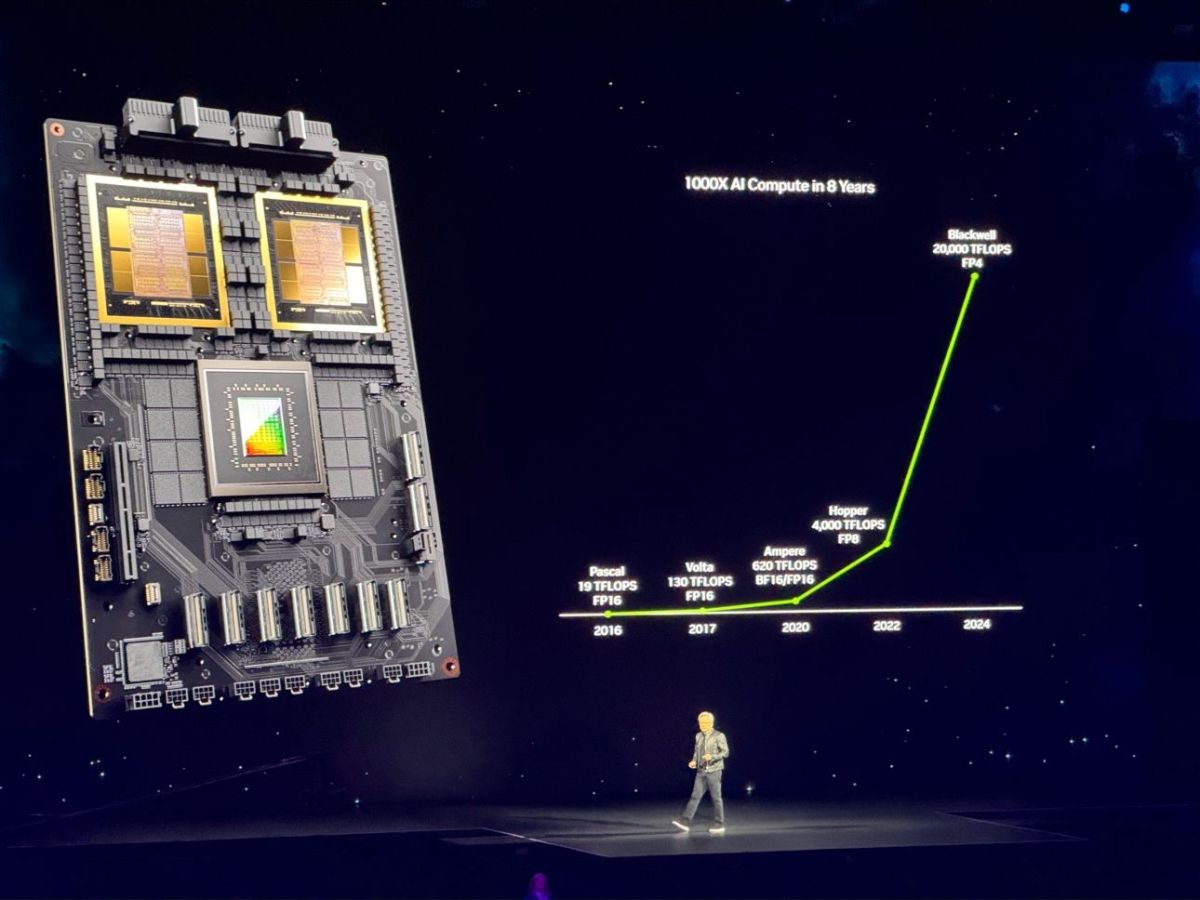

The partnership combines NVIDIA’s expertise in GPU computing with AWS’s cloud infrastructure. The Grace Hopper GH200 superchip, which pairs an Arm-based Grace CPU with a Hopper GPU, is aimed at data-intensive workloads, making it most relevant to industries that rely on high-performance computing.

Project Ceiba is the supercomputing part of the collaboration, built on NVIDIA GPUs and AWS’s cloud resources. It is intended to let researchers and scientists take on computational problems that were previously out of reach.

Finally, NeMo Retriever targets AI language models. It is a retrieval tool that connects language models to external data sources so that their responses can draw on that data, with the aim of improving the efficiency and accuracy of natural language processing applications.

Overall, the NVIDIA and AWS partnership aims to give organizations across industries more performance and capability for supercomputing and AI workloads through three pieces: the GH200-powered DGX Cloud, Project Ceiba, and NeMo Retriever.